Motion Analysis of Musical Performances: Towards a Pedagogical Tool for Expressivity

Why is it possible to distinguish one musician’s personal style and expression from another’s? Why do some musicians move in a more theatrical way than others?

Students at CIRMMT are interested in studying performers’ movement kinematics, that is, how performers use their bodies to communicate their musical intentions, in order to distinguish recurrent motion patterns related to the musical structure from those that belong to each performer’s personal signature.

In the process of learning and practicing a piece of music, musicians become familiar with the excerpt and internalize their personal interpretation of what the music tells them and how it guides their body movements. This process matters because it is reflected in how audience members perceive and appreciate the performance. A deeper understanding of musicians’ movements and gestural expression can also help in designing and implementing pedagogical software that provides systematic feedback to student performers.

The high precision of the Qualisys 3D motion capture system, combined with non-intrusive reflective markers, allows us to track musicians’ fast and skillful movements accurately and to study the relationships between their movements and sound parameters. The system is used to analyze the body movements of performing musicians, offering insight into how they rely on movement to convey expressive information, and to build a motion database that can inform the design of new pedagogical tools. Such software could be used in instrumental lessons to help student musicians develop an accurate awareness of their movements and relate their gestures to the sound they produce.

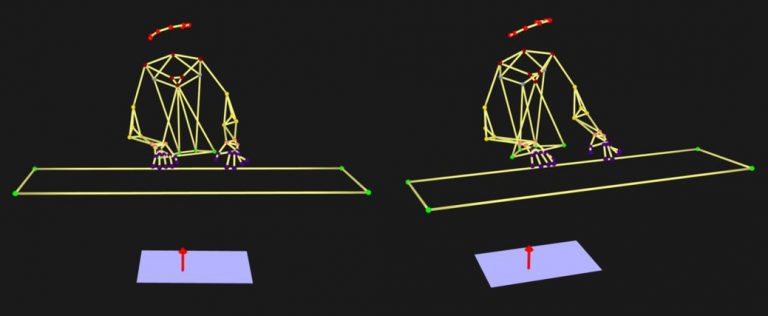

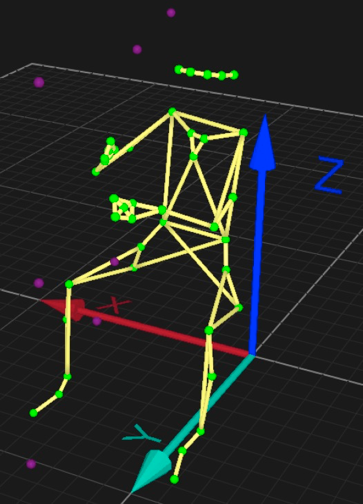

Forty-nine reflective markers are placed on each pianist according to a Plug-in Gait model covering the upper body, arms, and hands, which gives a precise description of the musicians’ body posture.

Data are captured at a rate of 240 frames per second. We extract specific kinematic features, such as amplitude and velocity of motion, from expert pianists’ performances.
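As a minimal sketch of how such features could be derived, the Python below computes the per-frame speed of a single marker by central differences at 240 Hz, plus one possible amplitude measure. It assumes positions in millimetres and defines amplitude, as an assumption, as the diagonal of the marker’s bounding box; the function names are hypothetical and the project’s actual feature definitions may differ.

```python
import math
from typing import List, Sequence, Tuple

FRAME_RATE_HZ = 240.0  # capture rate stated in the text

Point = Tuple[float, float, float]  # (x, y, z), assumed in millimetres


def marker_speed(traj: Sequence[Point], fs: float = FRAME_RATE_HZ) -> List[float]:
    """Speed of one marker per frame (mm/s).

    Uses central differences for interior frames and one-sided
    differences at the first and last frame.
    """
    n = len(traj)
    if n < 2:
        return [0.0] * n
    dt = 1.0 / fs
    speeds = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        # |p[hi] - p[lo]| divided by the time elapsed between those frames
        speeds.append(math.dist(traj[hi], traj[lo]) / ((hi - lo) * dt))
    return speeds


def motion_amplitude(traj: Sequence[Point]) -> float:
    """Hypothetical amplitude measure: diagonal of the trajectory's bounding box (mm)."""
    mins = [min(p[k] for p in traj) for k in range(3)]
    maxs = [max(p[k] for p in traj) for k in range(3)]
    return math.dist(mins, maxs)


# Usage: a marker moving 1 mm per frame along x for 10 frames
wrist = [(float(i), 0.0, 0.0) for i in range(10)]
print(marker_speed(wrist)[:3])   # each value is ~240 mm/s
print(motion_amplitude(wrist))   # 9.0
```

Central differences are used because they are less biased than forward differences at high frame rates; in practice the positions would usually be low-pass filtered first, since differentiation amplifies marker jitter.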

Motion data are collected with a 17-camera Qualisys motion capture system. The data streams from the MIDI piano keyboard, the video camera, and the mocap cameras are synchronized and timestamped.

Absolute timestamps are provided by an SMPTE timecode generator (Rosendahl Nanosyncs HD).
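Aligning streams through a shared timecode amounts to converting each timestamp to seconds and then to a frame index of the 240 Hz capture. The sketch below assumes non-drop-frame SMPTE timecode in `HH:MM:SS:FF` form at 30 frames per second; the timecode rate actually configured on the Nanosyncs HD is not stated here, drop-frame timecode (29.97 fps) would need extra handling, and the function names are hypothetical.

```python
MOCAP_RATE_HZ = 240.0   # capture rate stated in the text
TIMECODE_FPS = 30       # assumed non-drop-frame SMPTE rate


def smpte_to_seconds(tc: str, fps: int = TIMECODE_FPS) -> float:
    """Convert 'HH:MM:SS:FF' (non-drop-frame) to seconds."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if not (0 <= mm < 60 and 0 <= ss < 60 and 0 <= ff < fps):
        raise ValueError(f"invalid timecode: {tc}")
    return hh * 3600 + mm * 60 + ss + ff / fps


def to_mocap_frame(event_tc: str, capture_start_tc: str,
                   fs: float = MOCAP_RATE_HZ, fps: int = TIMECODE_FPS) -> int:
    """Index of the mocap frame nearest to an event stamped in SMPTE timecode."""
    elapsed = smpte_to_seconds(event_tc, fps) - smpte_to_seconds(capture_start_tc, fps)
    return round(elapsed * fs)


# Usage: a MIDI note-on stamped 1.5 s after the capture started
print(to_mocap_frame("10:00:01:15", "10:00:00:00"))  # 360
```

Whole timecode frames resolve only to 1/30 s (eight mocap frames at 240 Hz), so finer event timing would have to come from sub-frame information or from each device’s own clock once the streams are anchored to a common start.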

Changes in timing and dynamics are also computed by recording the audio and synchronizing it with the motion capture data streams, along with the force exerted on the piano stool, which is measured with a force plate.
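One common way to quantify timing changes is to compute inter-onset intervals and convert them into a local tempo curve; dynamics can be approximated by the RMS level of the audio. The sketch below assumes note onsets have already been extracted in seconds (for example from the MIDI stream), that the notated duration of each interval in beats is known from the score, and that audio samples are floats in [-1, 1]. These choices and names are illustrative, not the project’s documented method.

```python
import math
from typing import List, Sequence


def local_tempo(onsets: Sequence[float], beats: Sequence[float]) -> List[float]:
    """Local tempo (BPM) for each inter-onset interval.

    onsets: performed onset times in seconds, in ascending order.
    beats:  notated duration, in beats, between consecutive onsets.
    """
    intervals = [b - a for a, b in zip(onsets, onsets[1:])]
    if len(beats) != len(intervals):
        raise ValueError("need one notated duration per inter-onset interval")
    return [60.0 * nb / ioi for nb, ioi in zip(beats, intervals)]


def relative_deviation(values: Sequence[float]) -> List[float]:
    """Deviation of each value from the mean, as a fraction of the mean."""
    mean = sum(values) / len(values)
    return [(v - mean) / mean for v in values]


def rms_db(samples: Sequence[float]) -> float:
    """RMS level in dB relative to full scale (samples assumed in [-1, 1])."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms) if rms > 0 else float("-inf")


# Usage: four quarter-note onsets with a slight slowing at the end
tempi = local_tempo([0.0, 0.5, 1.0, 1.6], [1, 1, 1])
print(tempi)                      # approximately [120, 120, 100]
print(relative_deviation(tempi))  # positive = faster than the mean tempo
```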

Fine movements such as finger motion can also be measured with several cameras angled toward the piano keyboard.

QTM (Qualisys Track Manager) displays the force data as a red arrow. The analog force signal is digitized through the Qualisys analog interface.
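Converting analog force-plate voltages into forces is generally a matrix product of the channel voltages with the plate’s calibration matrix, and the analog stream is often sampled faster than the cameras. The sketch below assumes a calibration matrix in newtons per volt supplied by the force-plate manufacturer (the values here are made up) and an analog rate that is an integer multiple of the 240 Hz capture rate. QTM performs such conversions itself; this is not its API, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def volts_to_force(volts: Sequence[float],
                   calib: Sequence[Sequence[float]]) -> List[float]:
    """Multiply channel voltages by a calibration matrix (rows: Fx, Fy, Fz)."""
    return [sum(c * v for c, v in zip(row, volts)) for row in calib]


def block_average(samples: Sequence[float], factor: int) -> List[float]:
    """Average consecutive blocks of `factor` samples, e.g. 1200 Hz -> 240 Hz
    with factor=5. A trailing incomplete block is dropped."""
    return [sum(samples[i:i + factor]) / factor
            for i in range(0, len(samples) - factor + 1, factor)]


# Hypothetical diagonal calibration: 500 N/V on each of three channels
CALIB = [[500.0, 0.0, 0.0],
         [0.0, 500.0, 0.0],
         [0.0, 0.0, 500.0]]

force = volts_to_force([0.02, -0.01, 1.2], CALIB)  # [Fx, Fy, Fz] in N
print(force, math.hypot(*force))                   # force vector and its magnitude
print(block_average([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], 2))  # [1.5, 3.5, 5.5]
```

Block averaging is the simplest way to bring the force signal onto the mocap frame grid; a proper anti-aliasing filter before decimation would be the more careful choice.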

Developing New Tools for Musicians: Kinematic Analysis of Gestural Affordance in Instrumental Performance

Motion analysis is a fundamental component in the research and design of new musical instruments and performance paradigms. Using motion capture, CIRMMT researchers are able to record and analyze the movement and gestures of instrumental performance, and prototype new models of gestural interaction.

One such project, Harp Gesture Acquisition for the Control of Audiovisual Synthesis, used motion capture analysis of concert harpists to create a vocabulary of performative gestures. These gestures were then mapped to input from wireless motion acquisition devices worn by the performer, allowing harpists to use their natural body movements to control external audio and visual processes during a live performance.
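Mapping a gesture vocabulary to control data can be sketched in two steps: recognize which vocabulary gesture a new sensor reading is closest to, then scale a continuous feature into a synthesis parameter range. The nearest-centroid classifier, the two-dimensional feature vectors, the gesture names, and the parameter ranges below are all hypothetical; the project’s actual recognition and mapping methods are not described here.

```python
import math
from typing import Dict, Sequence, Tuple

# Hypothetical vocabulary: centroid feature vectors (e.g. normalized peak
# acceleration, normalized gesture duration) learned offline from mocap data.
VOCABULARY: Dict[str, Tuple[float, float]] = {
    "pluck": (0.9, 0.1),
    "glissando": (0.3, 0.8),
    "damp": (0.1, 0.1),
}


def classify(features: Sequence[float],
             vocabulary: Dict[str, Tuple[float, float]] = VOCABULARY) -> str:
    """Return the vocabulary gesture whose centroid is nearest (Euclidean)."""
    return min(vocabulary, key=lambda name: math.dist(features, vocabulary[name]))


def scale(x: float, in_lo: float, in_hi: float,
          out_lo: float, out_hi: float) -> float:
    """Linearly map x from [in_lo, in_hi] to [out_lo, out_hi], clamping at the ends."""
    t = (x - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


# Usage: a reading from a wearable sensor
reading = (0.85, 0.15)
gesture = classify(reading)                             # "pluck"
cutoff_hz = scale(reading[0], 0.0, 1.0, 200.0, 2000.0)  # hypothetical filter cutoff
print(gesture, cutoff_hz)
```

Separating discrete recognition from continuous scaling lets a recognized gesture trigger an event while the same sensor stream keeps shaping a parameter in real time.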

CIRMMT Motion Capture Researchers: (l to r) Catherine Massie-Laberge, John Sullivan and Alex Nieva